EOPF Zarr Data Access with openEO and STAC

Learn how to use the EOPF Zarr data through STAC and openEO

Table of contents¶

Run this notebook interactively with all dependencies pre-installed

Introduction¶

This is a practical introduction to showcasing the interoperability of EOPF Zarr with openEO by accessing and processing EOPF Zarr products via STAC and the openEO API. OpenEO is a unified interface that makes earth observation workflows more standardised, portable, and reproducible.

Setup¶

Start importing the necessary libraries

pip install openeo-processes-dask[implementations]

pip install openeo-pg-parser-networkxfrom openeo.local import LocalConnectionDid not load machine learning processes due to missing dependencies: Install them like this: `pip install openeo-processes-dask[implementations, ml]`

# import openeo # Un-Comment once pull-requests are merged.

import rioxarray as rxr

import dask

from xcube_resampling.utils import reproject_bboxConnect to openEO¶

Connect to an openEO backend.

connection = LocalConnection("./")# Un-Comment once pull-requests are merged and deployed on EODC backend!

# connection_url = "https://openeo.eodc.eu/" # this is throwing an 504 failed to parse error message in the first .download

# connection = openeo.connect(connection_url).authenticate_oidc()Load a virtual data cube¶

First, we’ll define the extents in time and space we are interested in

bbox = [

9.669372670305636,

53.64026948239441,

9.701345402674315,

53.66341039786631,

] # ~250x250 px Northern Germany, Moorrege.

time_range = ["2025-05-01", "2025-06-01"]Define the URL to the STAC resource you want to query. And the band names - they are often specific to the STAC Catalogs used.

stac_url = "https://stac.core.eopf.eodc.eu/collections/sentinel-2-l2a"

bands = ["b02", "b08"] # bandnames are specific to the STAC CatalogsReproject the bbox to the native crs of the STAC items, so that xcube-eopf(which is used under the hood in load_stac()) does not trigger reprojection internally. See detailed behavior here.

crs_reprj = "EPSG:32632"

bbox_reprj = reproject_bbox(bbox, "EPSG:4326", crs_reprj)

spatial_extent_reprj = {

"west": bbox_reprj[0],

"south": bbox_reprj[1],

"east": bbox_reprj[2],

"north": bbox_reprj[3],

"crs": crs_reprj,

}Create a virtual data cube via the funtion load_stac() according to the chosen extents. This also includes the search on the STAC API. Data is not loaded into the working environment here.

cube = connection.load_stac(

url=stac_url,

spatial_extent=spatial_extent_reprj,

temporal_extent=time_range,

bands=bands,

)Inspect the load_stac() process visually.

cubeCreate a processing workflow¶

Apply processing. We’re calculating the NDVI. And getting a monthly mean.

red = cube.band(bands[0])

nir = cube.band(bands[1])

cube_ndvi = (nir - red) / (nir + red)

cube_mnth = cube_ndvi.reduce_dimension(dimension="time", reducer="mean")Inspect the worflow visually.

cube_mnthFinalize the workflow via .execute() for the locally executed openEO version or .download() when connected to an openeo backend. The data is not loaded into the working environment now!

Note: Currently we are using the local processing version of openEO. It is not sending any requests to an actual backend. It is converting the openEO process graph to an executable Python code. It’s interacting only with the STAC API and the data buckets.

Note: .execute() also redoes the STAC API search currently.

res = cube_mnth.execute()

dask.is_dask_collection(res) # check wether data is still lazy.TrueBut we can inspect the dimensionality of the virtual cube.

resProcess and load the data into the working environment.

res = res.compute()

dask.is_dask_collection(

res

) # now the data is loaded, it's not a dask collection anymore.Falseres # the actual values are accessible in the working environment.Plot the map.

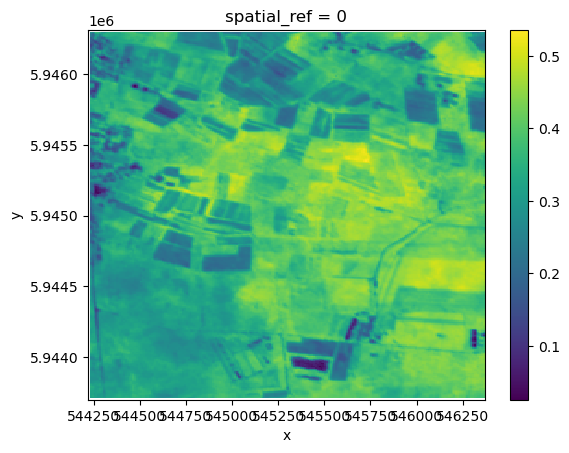

res.plot()

Compare to other sources¶

We’ll reuse the process graph we have created and only change the URL to the STAC API to use other sources of input. This is a good example of portability and reproducibility.

def load_ndvi_mean(stac_url, bbox, time_range, bands, time_dim):

cube = connection.load_stac(

url=stac_url,

spatial_extent={

"west": bbox[0],

"south": bbox[1],

"east": bbox[2],

"north": bbox[3],

},

temporal_extent=time_range,

bands=bands,

)

red = cube.band(bands[0])

nir = cube.band(bands[1])

cube_ndvi = (nir - red) / (nir + red)

cube_mnth = cube_ndvi.reduce_dimension(dimension=time_dim, reducer="mean")

return cube_mnthMS Planetary Computer¶

MS Planetary Computer is a stable source of satellite data made available via a STAC API. The Sentinel-2 L2A data has been transformed to Cloud Optimized GeoTiffs (COG) in this collection.

stac_url = "https://planetarycomputer.microsoft.com/api/stac/v1/collections/sentinel-2-l2a" # cog

bands = ["B02", "B08"]

cube_mnth = load_ndvi_mean(stac_url, bbox, time_range, bands, time_dim="time")Finalize the reused workflow via .execute(). The data is not loaded into the working environment yet.

res_mspc = cube_mnth.execute()

dask.is_dask_collection(res_mspc)TrueBut we can inspect the dimensionality of the virtual cube already. The spatial extents are slightly different than what we have received from the EOPF STAC API. This is probably due to the internal reprojection of the bbox. The data is not loaded into the working environment yet.

res_mspcNow we are processing and loading the data into the working enviornment.

res_mspc = res_mspc.compute()

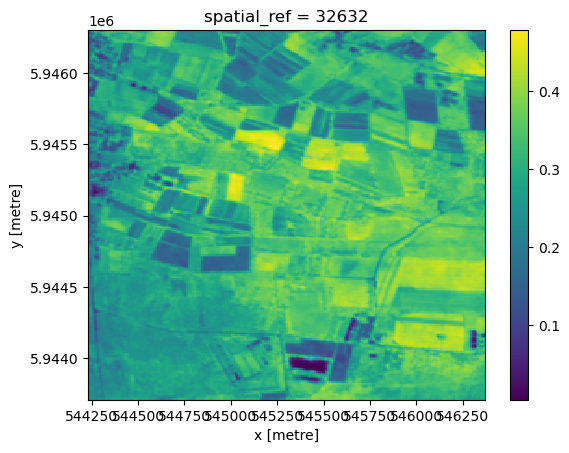

res_mspcres_mspc.plot()

Let’s check the differences of the two results. Note: Differences occur mainly due to the older processing version (2.11) of Sentinel-2 used on MS Planetary Computer.

diff = res - res_mspc

diff.to_series().describe()count 55426.000000

mean 0.055157

std 0.025907

min -0.036408

25% 0.037149

50% 0.054587

75% 0.071017

max 0.172499

dtype: float64CDSE - via openEO¶

Connect to openEO on CDSE. This also authorizes us to request data from the CDSE STAC API.

import openeoconnection_url = "openeo.dataspace.copernicus.eu"

connection = openeo.connect(connection_url).authenticate_oidc()Authenticated using device code flow.

stac_url = (

"https://stac.dataspace.copernicus.eu/v1/collections/sentinel-2-l2a/" # safe format

)

bands = ["B02", "B08"]

cube_mnth = load_ndvi_mean(stac_url, bbox, time_range, bands, time_dim="t")Since we’re connected to the CDSE openEO backend we need to download the result (only JSON can be received via .exectue()). This triggers the processing on the openEO backend as a synchronous call. To register a batch job on the backend and execute it asynchronously use .execute_batch()

res_cdse_backend = cube_mnth.download("res_cdse_backend.tif")And load it into the working environment.

res_cdse_backend = rxr.open_rasterio("res_cdse_backend.tif")Here we get the same extents as from EOPF. By loading via rioxarray the ndvi values are encoded into a band dimension.

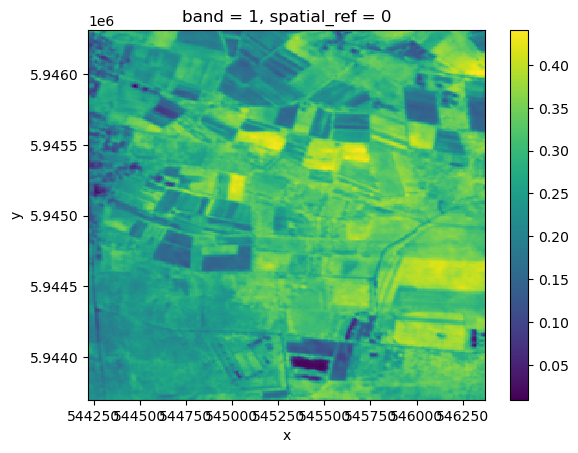

res_cdse_backendres_cdse_backend.plot()

Let’s check the differences. Very marginal, likely due to different software used in the different openEO implementations used.

diff2 = res - res_cdse_backend

diff2.to_series().describe()count 55426.000000

mean 0.078158

std 0.023538

min -0.006268

25% 0.062287

50% 0.077005

75% 0.092643

max 0.174986

dtype: float64Takeaway¶

This tutorial has shown how to:

Access EOPF Zarr Data via STAC and openEO

Apply reusable processing workflows via openEO

Compared the EOPF Zarr Data to other available Sentinel-2 sources